Thanks to the contributions of hundreds of developers in the KubeVela community, including around 500 PRs from more than 30 contributors, KubeVela 1.3 is officially released. Compared with v1.2, released three months ago, this version adds a large number of new features in three areas: the OAM engine (Vela Core), the GUI dashboard (VelaUX), and the addon ecosystem. These features grew out of the in-depth practice of end users such as Alibaba, LINE, China Merchants Bank, and iQiyi, and are now part of the KubeVela project that everyone can use out of the box.

Pain Points of Application Delivery

So, what challenges have we encountered in cloud-native application delivery?

Hybrid cloud and multi-cluster are the new norm

On the one hand, as the services of global cloud providers mature, most enterprises now build their infrastructure mainly on cloud providers, with self-built infrastructure as a supplement. More and more enterprises can directly benefit from the business convenience brought by cloud technology, use the elasticity of the cloud, and reduce the cost of self-built infrastructure. Enterprises need a standardized application delivery layer that covers containers, cloud services, and various self-built services in a unified manner, so that they can easily achieve cloud-to-cloud interoperability, reduce the risks of tedious application migration, and move to the cloud worry-free.

On the other hand, out of concerns such as infrastructure stability and multi-environment isolation, and because of the limit on how large a single Kubernetes cluster can grow, more and more enterprises are adopting multiple Kubernetes clusters to manage container workloads. How to manage and orchestrate container applications at the multi-cluster level, and solve problems such as scheduling, dependencies, versions, and gray releases, while giving business developers a low-threshold experience, is a big challenge.

The hybrid cloud and multi-cluster environments involved in modern application delivery therefore cover not only multiple Kubernetes clusters, but also diverse workloads and the DevOps capabilities for managing cloud services, SaaS, and self-built services.

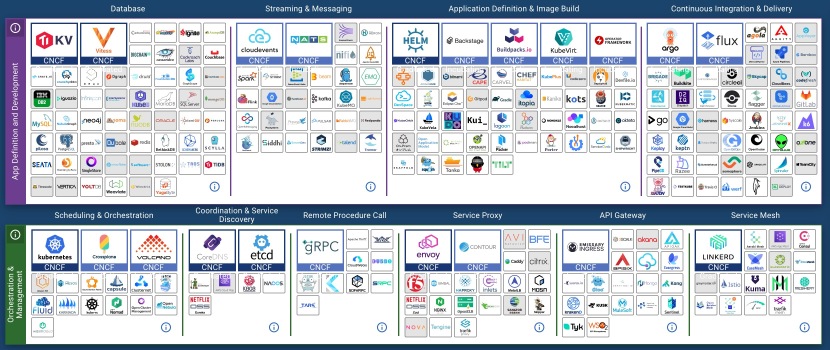

How to pick from 1,000+ technologies in the cloud-native era

Take the open-source projects in the CNCF ecosystem as an example: their number has exceeded 1,000. R&D teams of different scales, industries, and technical backgrounds may appear to be doing similar work in delivering and managing business applications, but as requirements and usage scenarios change, their technology stacks diverge enormously. This brings a very high learning cost and a high threshold for integration and migration. Meanwhile, the thousands of projects in the CNCF ecosystem keep tempting us to integrate new projects, add new features, and better accomplish business goals. The era of static technology stacks is long gone.

Figure 1. CNCF landscape


Next-generation application delivery and management requires flexible assembly capabilities. Starting from a minimum capability set, a team should be able to add new functions as needed at a small cost, without the platform becoming significantly bloated. Traditional PaaS solutions built only on a fixed set of experiences have proven unable to meet a team's changing scenarios as its product evolves.

The next step of DevOps: delivering and managing applications for diverse infrastructures

For more than a decade, DevOps technology has been evolving to increase productivity. Today, the way business applications are produced has also changed greatly: from the traditional cycle of coding, testing, packaging, deployment, maintenance, and observation, to a model in which continuously improving cloud infrastructure makes various API-based SaaS services an integral part of the application. With the diversification of development languages, deployment environments, and components, the traditional DevOps toolchain is gradually failing to cope, while the complexity of user needs is increasing exponentially.

DevOps lives on, but we need different solutions. For modern application delivery and management, we still pursue the same goal: reducing human effort as much as possible and becoming more intelligent. The new generation of DevOps technology needs easier-to-use integration capabilities, service mesh capabilities, and management capabilities that combine observation and maintenance. At the same time, the tools need to be simple and easy to use, with the complexity kept inside the platform. When choosing, enterprises can start from their own business needs, accommodate both the new architecture and legacy systems, and assemble a platform solution that suits their team, so that the new platform does not become a burden for business developers or the enterprise.

The Path of KubeVela Lies Ahead

To build the next-generation application delivery platform, here is what we are doing:

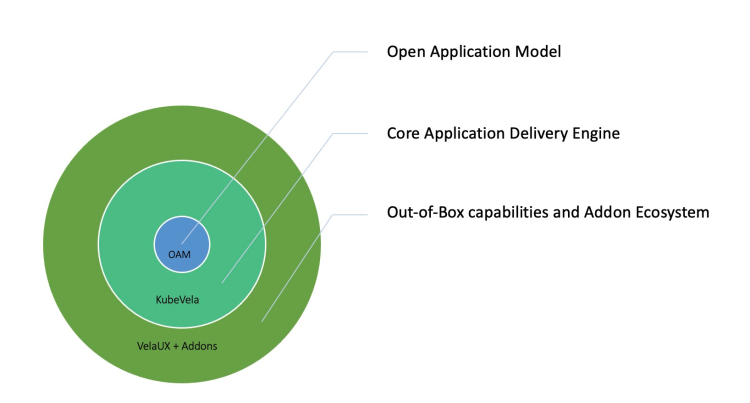

Figure 2. Overview of the OAM/KubeVela ecosystem


OAM (Open Application Model): a methodology evolving through fast-paced practice

Based on the internal practical experience of Alibaba and Microsoft, we launched OAM, a brand-new application model and concept, in 2019. Its core idea is the separation of concerns: through a unified abstraction of components and traits, it standardizes business research and development in the cloud-native era. Collaboration between development teams and DevOps teams becomes more efficient, and the complexity caused by differences between infrastructures is avoided. We then released KubeVela as a standardized implementation of the OAM model, to help companies adopt OAM quickly while ensuring that OAM-compliant applications can run anywhere. In short, OAM describes the complete components of a modern application in a declarative way, while KubeVela runs the application according to the final state declared in OAM. Through a reconcile loop oriented to that final state, the two jointly ensure the consistency and correctness of application delivery.

Recently, Google published a paper named "Prodspec and Annealing" that shares what it has learned from building its internal infrastructure. Its design concept and practice are strikingly similar to those of OAM and KubeVela, which shows that enterprises around the world share the same vision for delivering cloud-native applications. The paper also reaffirms the approach of a standardized model taken by OAM and KubeVela. In the future, we will continue to develop the OAM model based on the community's practice and the evolution of KubeVela, and continue to distill best practices into the methodology.

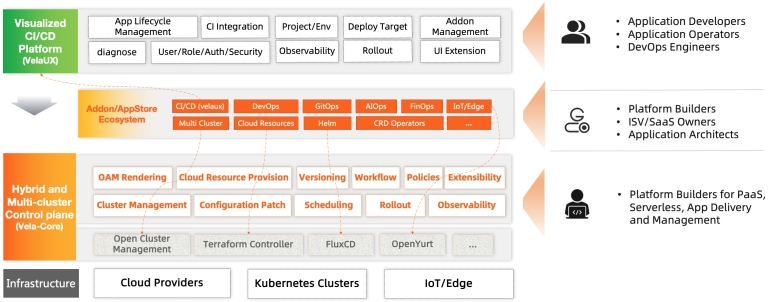

A universal hybrid environment and multi-cluster delivery control plane

The kernel of KubeVela exists in the form of a CRD Controller, which can be easily integrated with the Kubernetes ecosystem, and the OAM model is also compatible with the Kubernetes API. In addition to the abstraction and orchestration capabilities of the OAM model, KubeVela's microkernel is also a natural application delivery control plane designed for multi-cluster and hybrid cloud environments. This also means that KubeVela can seamlessly connect diverse workloads such as cloud resources and containers, and orchestrate and deliver them in different clouds and clusters.

In addition to the basic orchestration capabilities, one core feature of KubeVela is that it lets users customize the delivery workflow. Workflow steps can deploy components to a cluster, set up manual approval, send notifications, and so on. When workflow execution enters a stable state (such as waiting for manual approval), KubeVela automatically maintains that state. Through the CUE-based configuration language, you can also integrate any IaC-based process, such as Kubernetes CRDs, SaaS APIs, Terraform modules, and image scripts. KubeVela's IaC extensibility lets it integrate technologies from the Kubernetes ecosystem at very low cost, so platform builders can quickly incorporate it into their own PaaS or delivery systems. Through the same extensibility, other ecosystem capabilities can be standardized for enterprise users.
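As a rough illustration of a customized workflow, the sketch below chains a manual approval, a component deployment, and a notification. The application name, component name, and webhook placeholder are made up for illustration, and the step types and their properties (`suspend`, `apply-component`, `notification`) follow our understanding of the built-in workflow steps, so please check the workflow step reference of your KubeVela version before relying on them.

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: workflow-sketch            # hypothetical name
spec:
  components:
    - name: web                    # hypothetical component
      type: webservice
      properties:
        image: nginx
  workflow:
    steps:
      # Pause here; KubeVela keeps the workflow in this state until it is resumed.
      - name: manual-approval
        type: suspend
      # Deploy the component once the workflow has been resumed.
      - name: deploy-web
        type: apply-component
        properties:
          component: web
      # Send a message after the deployment step has finished.
      - name: notify
        type: notification
        properties:
          slack:
            url:
              value: <your-slack-webhook-url>   # placeholder, not a real URL
            message:
              text: "web has been deployed"
```

A suspended workflow like this one is typically continued with `vela workflow resume workflow-sketch`.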

In addition to the advanced model and the extensible kernel, we have also heard many requests from the community for an out-of-the-box product that makes KubeVela easier to use. Since version 1.2, the community has invested in developing the GUI dashboard project (VelaUX), which is based on KubeVela's microkernel, runs on top of the OAM model, and provides a delivery platform for CI/CD scenarios. We hope that enterprises can adopt VelaUX quickly to meet current business needs, and that its robust extensibility will also meet the needs of future business.

Figure 3. Product architecture of KubeVela


Around this path, in version 1.3, the community brought the following updates:

Enhancement as a Kubernetes Multi-Cluster Control Plane

Switch to multi-cluster seamlessly, with no migration

After an enterprise has transformed its applications to a cloud-native architecture, does it still need to rework their configuration when switching to multi-cluster deployment? The answer is no.

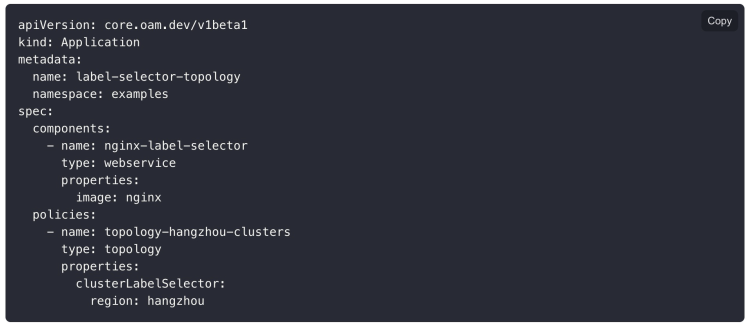

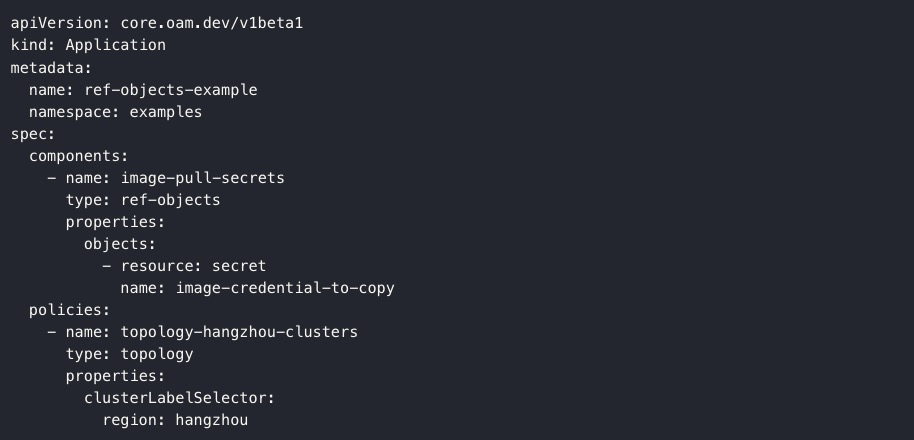

KubeVela is built on a multi-cluster basis from the start. As shown in Figure 4, this application YAML describes an application with an Nginx component that will be published to all clusters labeled region=hangzhou. For the same application description, we only need to name the target clusters in a policy, or select a set of clusters by label.
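In text form, an application like the one in Figure 4 might look roughly like the sketch below. The application and component names are illustrative, and the `topology` policy fields follow our understanding of the v1.3 multi-cluster documentation, so treat this as a sketch rather than a copy of the figure.

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: basic-topology             # illustrative name
  namespace: examples              # illustrative namespace
spec:
  components:
    - name: nginx-basic            # illustrative component name
      type: webservice
      properties:
        image: nginx
  policies:
    # Deliver the application to every managed cluster labeled region=hangzhou.
    - name: topology-hangzhou-clusters
      type: topology
      properties:
        clusterLabelSelector:
          region: hangzhou
```

To target clusters by name instead of by label, the policy would list them explicitly, for example `clusters: ["hangzhou-1", "hangzhou-2"]` (cluster names assumed here).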

Figure 4. OAM application - select deployment cluster


Of course, the application description shown in Figure 4 is entirely based on the OAM specification. If your current application is already defined with Kubernetes native resources, don't worry: we support a smooth transition. Figure 5 below, "Referencing Kubernetes resources for multi-cluster deployment," describes an application whose component references a Secret resource that exists in the control cluster and publishes it to all clusters labeled region=hangzhou.
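A minimal sketch of such a reference, assuming the `ref-objects` component type described in the linked documentation and a hypothetical Secret named `image-credential-to-copy` in the control cluster:

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: ref-objects-example        # illustrative name
  namespace: examples              # illustrative namespace
spec:
  components:
    # Reference an existing Kubernetes object instead of defining a workload.
    - name: image-pull-secrets
      type: ref-objects
      properties:
        objects:
          - resource: secret
            name: image-credential-to-copy   # hypothetical Secret in the control cluster
  policies:
    # Copy the referenced Secret to every cluster labeled region=hangzhou.
    - name: topology-hangzhou-clusters
      type: topology
      properties:
        clusterLabelSelector:
          region: hangzhou
```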

Figure 5. Reference Kubernetes native resource


In addition to multi-cluster deployment of applications, referencing Kubernetes objects can also be used in scenarios such as multi-cluster replication of existing resources, cluster data backup, etc.

Handling multi-cluster differences

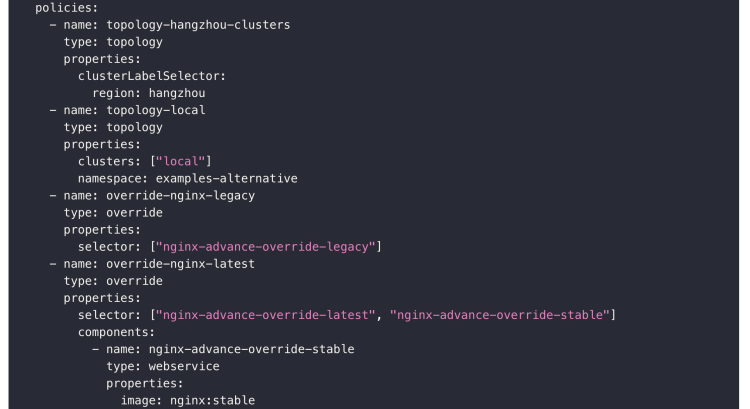

Although the application is described in a unified OAM model, its deployment may differ across clusters. For example, different regions use different environment variables and image registries; different clusters deploy different components; or a component is deployed to multiple clusters for high availability. For such requirements, we provide deployment policies for differentiated configuration, as shown in Figure 6 below. The first and second policies are of the topology type and define two delivery targets in two different ways. The third policy deploys only the specified components. The fourth policy deploys the two selected components and overrides the image configuration of one of them.

Figure 6. Differentiated configuration of multi-clusters


KubeVela supports flexible differentiated-configuration policies, which can override component properties, traits, and other fields. As shown in the figure above, the third policy demonstrates component selection, and the fourth policy describes a difference in image version. Note that no target is specified when describing a difference: differentiated configuration is applied flexibly by combining it with a target policy in the workflow steps.
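The four kinds of policy described above could be written roughly as the following fragment of an Application's `spec`. Policy names, cluster names, and component names (`nginx-a`, `nginx-b`) are assumptions for illustration, and the `topology` and `override` fields follow our reading of the v1.3 documentation.

```yaml
policies:
  # 1. Target policy: select clusters by name.
  - name: topology-by-name
    type: topology
    properties:
      clusters: ["hangzhou-1", "hangzhou-2"]
  # 2. Target policy: select clusters by label.
  - name: topology-by-label
    type: topology
    properties:
      clusterLabelSelector:
        region: hangzhou
  # 3. Differentiation policy: deploy only the selected component.
  - name: select-nginx-a
    type: override
    properties:
      selector: ["nginx-a"]
  # 4. Differentiation policy: deploy two components and change the image of one.
  - name: override-nginx-b-image
    type: override
    properties:
      selector: ["nginx-a", "nginx-b"]
      components:
        - name: nginx-b
          properties:
            image: nginx:1.20
```

The override policies name no cluster; they are meant to be paired with a topology policy in a workflow step.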

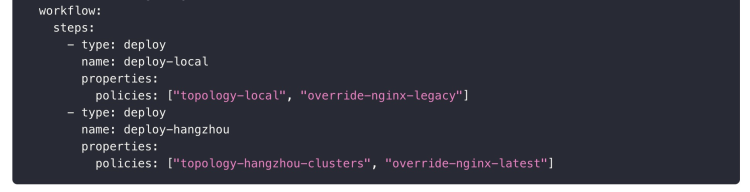

Configure a multi-cluster delivery process

The delivery process to different target clusters is controllable and described by a workflow. As shown in Figure 7, the workflow deploys to two clusters in separate steps, each using its own target policy and differentiation policy. Policies therefore only need to be defined atomically, and can be flexibly combined in workflow steps to meet the requirements of different scenarios.
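A sketch of such a workflow, assuming the built-in `deploy` step type and using illustrative policy and component names, might combine atomic policies like this:

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: deploy-workflow-sketch     # illustrative name
spec:
  components:
    - name: nginx
      type: webservice
      properties:
        image: nginx
  policies:
    - name: topology-local
      type: topology
      properties:
        clusters: ["local"]
    - name: topology-hangzhou
      type: topology
      properties:
        clusterLabelSelector:
          region: hangzhou
    - name: override-image
      type: override
      properties:
        components:
          - name: nginx
            properties:
              image: nginx:1.20
  workflow:
    steps:
      # Step 1: deploy to the control cluster with no differences applied.
      - name: deploy-local
        type: deploy
        properties:
          policies: ["topology-local"]
      # Step 2: wait for manual approval, then deploy to the Hangzhou
      # clusters with the image override applied.
      - name: deploy-hangzhou
        type: deploy
        properties:
          auto: false
          policies: ["topology-hangzhou", "override-image"]
```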

Figure 7. Customize the multi-cluster delivery process


There are many more usages for delivery workflow, including multi-cluster canary release, manual approval, precise release control, etc.

Version control, safe and traceable

The description of a complex application changes constantly under agile development. To keep releases safe, we need to be able to roll an application back to a previous correct state during or after a release. We have therefore introduced a more robust versioning mechanism in this version.

Figure 8. Querying historical version of the application


We can query every past version of an application, including its release time and whether the release succeeded. We can compare the changes between versions and, when a release fails, quickly roll back based on the snapshot rendered by the last successful version. If a newly released version fails, you don't need to change the configuration source: you can re-release directly from a historical version. The version control mechanism reflects the idea of centralized application configuration management: the complete description of the application is first rendered in one place, and then checked, stored, and distributed.
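One way to make versions explicit, as we understand the version control documentation linked below, is to tag each release with the `app.oam.dev/publishVersion` annotation. The application name and the version value here are illustrative.

```yaml
apiVersion: core.oam.dev/v1beta1
kind: Application
metadata:
  name: versioned-app              # illustrative name
  annotations:
    # A new release is published when this value changes (assumed behavior;
    # see the version control documentation for details).
    app.oam.dev/publishVersion: alpha1
spec:
  components:
    - name: nginx
      type: webservice
      properties:
        image: nginx
```

Each published version is kept as a snapshot, which is what a rollback or a re-release from history would be based on.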

See more Vela Core usages

- Multi-cluster application delivery: https://kubevela.io/docs/case-studies/multi-cluster

- Reference external Kubernetes objects: https://kubevela.io/docs/end-user/components/ref-objects

- Application version management: https://kubevela.io/docs/end-user/version-control

VelaUX Introduces Multi-Tenancy Isolation and User Authentication

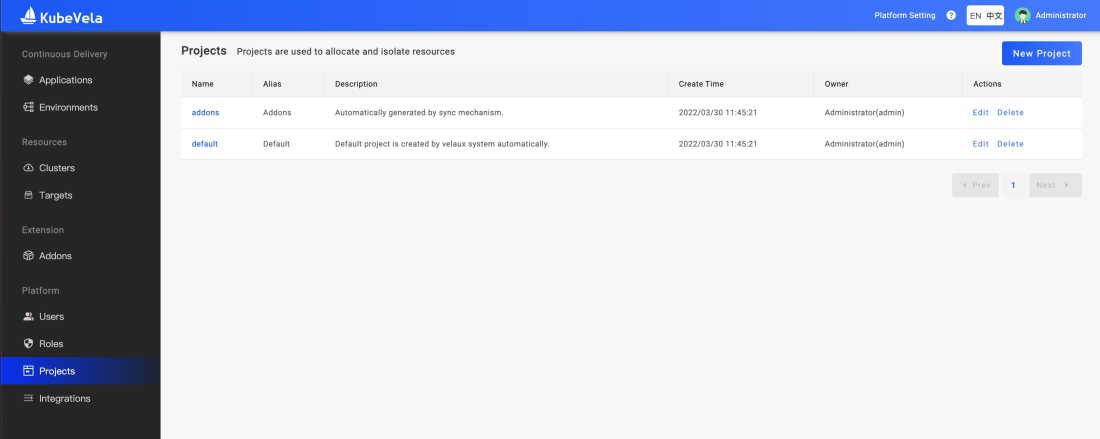

Multi-tenancy and isolation for enterprises

In VelaUX, we introduce the concept of a Project, which isolates tenants for safety. A project covers application delivery targets, environments, team members, permissions, and so on. Figure 9 shows the project list page. Project administrators can create different projects on this page according to each team's needs and allocate the corresponding resources. This capability becomes very important when multiple teams or project groups in an enterprise publish their business applications through the VelaUX platform at the same time.

Figure 9. Project management page


Open Authentication & RBAC

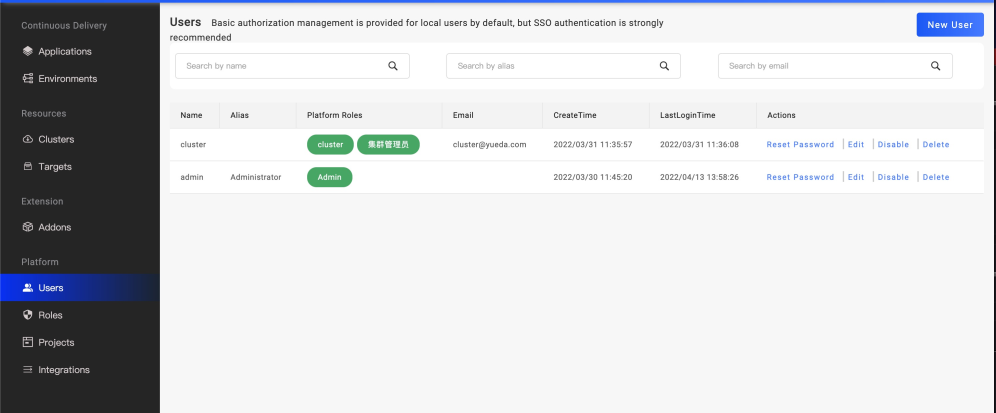

User authentication is one of the basic capabilities that a critical platform must have. Since version 1.3, we have supported user authentication and RBAC authorization.

We believe that most enterprises have already built a unified authentication platform (OAuth or LDAP). Therefore, VelaUX first integrates Dex to provide single sign-on, supports LDAP, OIDC, GitLab/GitHub, and other user authentication methods, and can act as one of the sub-portals behind the unified login. Of course, if your team does not need unified authentication, we also provide basic local user authentication.

Figure 10. Local user management


For authorization, we use the RBAC model. However, we also saw that basic RBAC cannot handle more precise permission control scenarios, such as granting the operation rights of a single application to specific users. We therefore borrow the design of IAM and extend permissions into policies composed of resource + action + condition + behavior. The permission-checking system (front-end UI checks and back-end API checks) already implements policy-oriented, fine-grained control. In terms of granting permissions, however, the current version only has some built-in standard permission policies; subsequent versions will provide the ability to create custom permissions.

We have also seen that some large enterprises have built independent IAM platforms. The RBAC data model of VelaUX is the same as that of common IAM platforms, so users who wish to connect VelaUX to their self-built IAM can do so seamlessly.

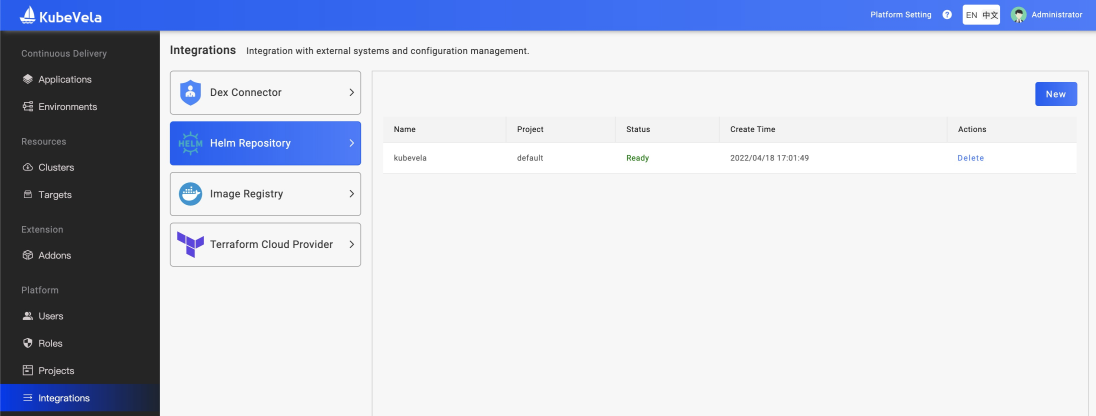

More secure centralized DevOps

Application delivery inevitably involves some operations-related configuration management, and in multi-cluster scenarios these requirements are particularly prominent: for example, authentication configuration for private image registries, authentication configuration for Helm repositories, or SSL certificates. We need to manage the validity of these configurations uniformly and securely synchronize them to wherever they are needed, preferably without business developers having to be aware of it.

In version 1.3, we introduced a module for integrated configuration management in VelaUX. At the bottom layer, it also uses component templates and the application resource distribution pipeline to manage and distribute configurations. Currently, Secrets are used for configuration storage and distribution. The configuration lifecycle is independent of business applications, and the configuration distribution process is maintained independently in each project. Administrators only need to fill in the configuration information according to the configuration template.

Figure 11. Integration configuration


Different Addons provide different configuration types, and users can define more configuration types according to their needs and manage them uniformly. The community is also planning business-level configuration management.

See more VelaUX usages

- Project management: https://kubevela.io/docs/how-to/dashboard/user/project

- User management: https://kubevela.io/docs/how-to/dashboard/user/user

- RBAC authorization: https://kubevela.io/docs/how-to/dashboard/user/rbac

- Use single sign-on: https://kubevela.io/docs/tutorials/sso

- Configuration management: https://kubevela.io/docs/how-to/dashboard/config/dex-connectors

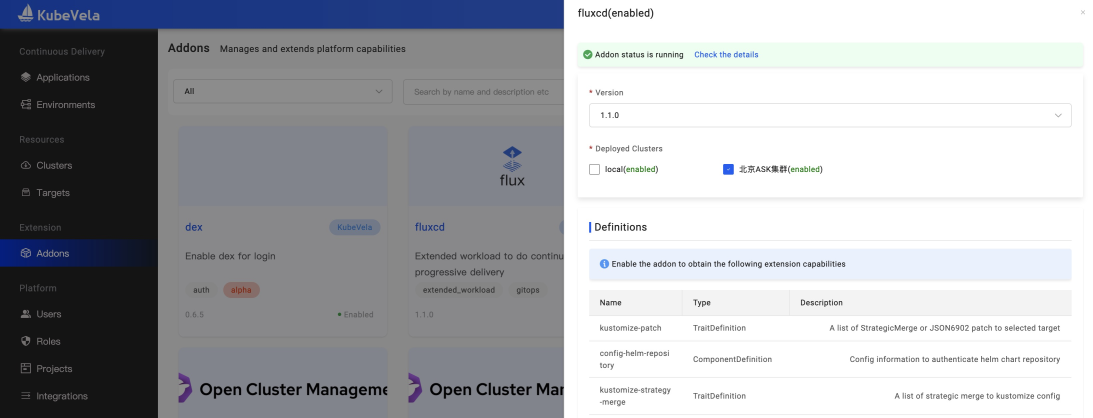

Introducing version control in Addon ecosystem

The Addon feature was introduced in version 1.2. It provides a specification for extension plug-ins, along with installation, operation, and maintenance management capabilities. The community can expand KubeVela's ecosystem capabilities by building different Addons. As plug-ins and the framework keep iterating, version compatibility problems gradually emerge, and a version management mechanism is urgently needed.

- Addon version distribution: We develop and manage the community's official Addons on GitHub. In addition to the version of the third-party product it integrates, each Addon also includes Definitions and other configurations. Therefore, each time an Addon is released, we package it under the version number it defines, and the history is preserved. We also reuse Helm Chart's artifact distribution API specification to distribute Addons.


Multi-cluster Addon controllable installation

Some Addons need to be installed in sub-clusters, such as the FluxCD plug-in shown in Figure 12, which provides Helm Chart rendering and deployment capabilities. In the past, such an Addon was distributed to all sub-clusters. However, according to community feedback, not every plug-in needs to be installed in every cluster. We need a differentiated mechanism to install extensions to specified clusters on demand.

Figure 12. Addon configuration


The user can specify the cluster to be deployed when enabling Addon, and the system will deploy the Addon according to the user's configuration.

New members to Addon ecosystem

While the framework's capabilities are being iterated and expanded, Addons in the community are also continuously being added and upgraded. At the cloud service level, the number of supported vendors has increased to seven. On the ecosystem side, AI training and serving plug-ins, the Kruise Rollout plug-in, the Dex plug-in, and others have been added. The Helm Chart plug-in and the OCM cluster management plug-in have also been updated for a better user experience.

More Addon usages

- Addon intro: https://kubevela.io/docs/how-to/cli/addon/addon

Recent roadmap

As KubeVela core becomes more and more stable, its extensibility is gradually being unleashed. The community's evolution through versions 1.2 and 1.3 has accelerated. Going forward, we will release new versions iteratively on a two-month cycle. In the next release, 1.4, we will add the following features:

- Observability: Provide a complete observability solution around logs, metrics, and traces, provide out-of-the-box observability of the KubeVela system, allow custom observability configuration, and integrate existing observability components or cloud resources.

- Offline installation: Provide relatively complete offline installation tools and solutions to help more users run KubeVela in offline environments.

- Multi-cluster permission management: Provide in-depth permission management capabilities for Kubernetes multi-cluster.

- More out-of-the-box Addon capabilities.

The KubeVela community looks forward to you joining us to build an easy-to-use and standardized next-generation cloud-native application delivery and management platform!