7 Dec 2020 12:33pm, by Lei Zhang and Fei Guo

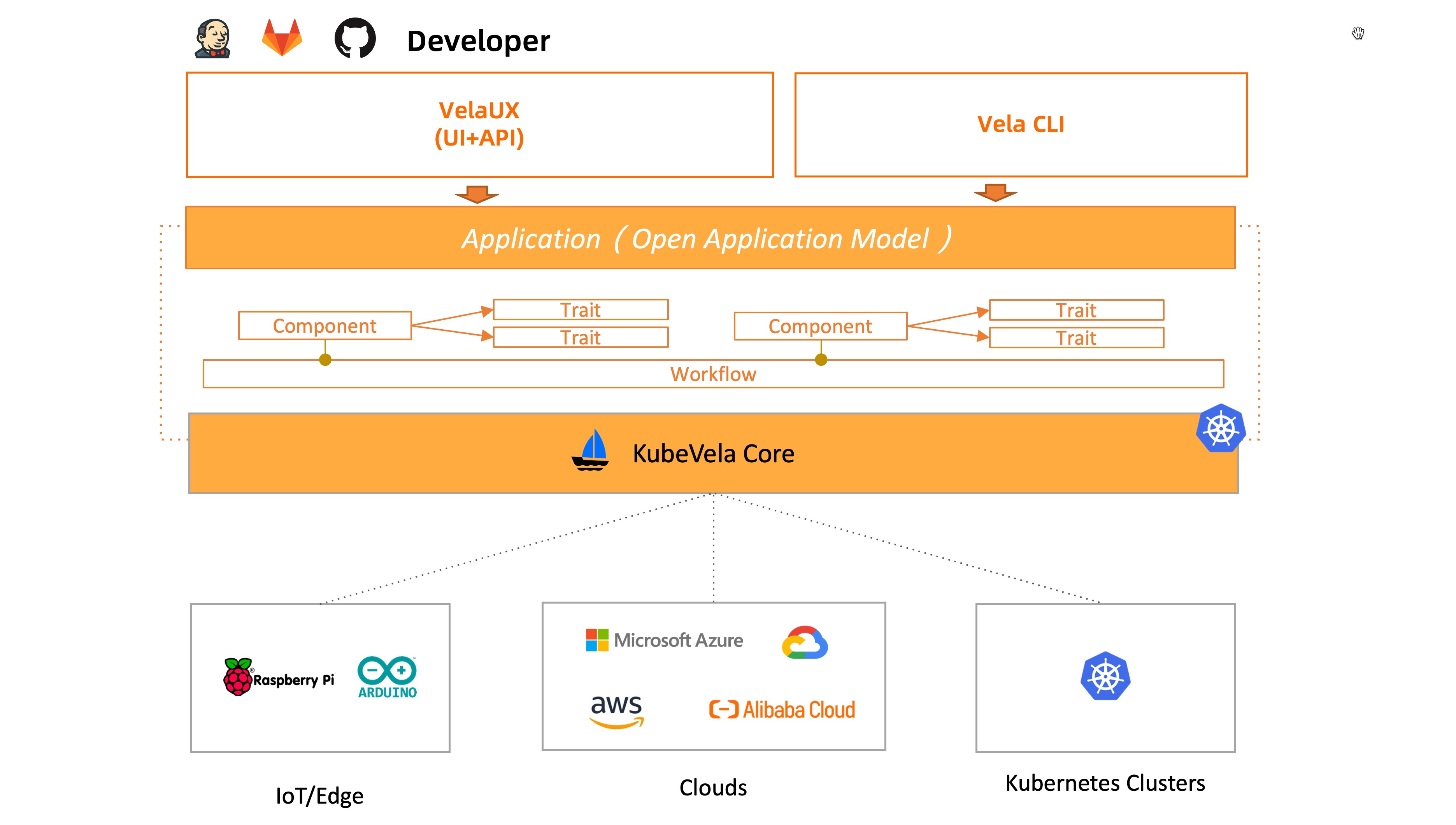

Last month at KubeCon+CloudNativeCon 2020, the Open Application Model (OAM) community launched KubeVela, an easy-to-use yet highly extensible application platform based on OAM and Kubernetes.

For developers, KubeVela is an easy-to-use tool that enables you to describe and ship applications to Kubernetes with minimal effort, yet for platform builders, KubeVela serves as a framework that empowers them to create developer-facing yet fully extensible platforms at ease.

The trend of cloud native technology is moving towards pursuing consistent application delivery across clouds and on-premises infrastructures using Kubernetes as the common abstraction layer. Kubernetes, although excellent in abstracting low-level infrastructure details, does introduce extra complexity to application developers, namely understanding the concepts of pods, port exposing, privilege escalation, resource claims, CRD, and so on. We’ve seen the nontrivial learning curve and the lack of developer-facing abstraction have impacted user experiences, slowed down productivity, led to unexpected errors or misconfigurations in production.

Abstracting Kubernetes to serve developers’ requirements is a highly opinionated process, and the resultant abstractions would only make sense had the decision-makers been the platform builders. Unfortunately, the platform builders today face the following dilemma: There is no tool or framework for them to easily extend the abstractions if any.

Thus, many platforms today introduce restricted abstractions and add-on mechanisms despite the extensibility of Kubernetes. This makes easily extending such platforms for developers’ requirements or to wider scenarios almost impossible.

In the end, developers complain those platforms are too rigid and slow in response to feature requests or improvements. The platform builders do want to help but the engineering effort is daunting: any simple API change in the platform could easily become a marathon negotiation around the opinionated abstraction design.