This article will focus on KubeVela and OpenYurt (two open-source projects of CNCF) and introduce the solution of cloud-edge collaboration in a practical Helm application delivery scenario.

Background

With the popularization of the Internet of Everything scenario, the computing power of edge devices is increasing. It is a new technological challenge to use the advantages of cloud computing to meet complex and diversified edge application scenarios and extend cloud-native technology to the end and edge. Cloud-Edge Collaboration is becoming a new technological focus. This article will focus on KubeVela and OpenYurt (two open-source projects of CNCF) and introduce the solution of cloud-edge collaboration in a practical Helm application delivery scenario.

OpenYurt focuses on extending Kubernetes to edge computing in a non-intrusive manner. Based on the container orchestration and scheduling capabilities of native Kubernetes, OpenYurt integrates edge computing power into the Kubernetes infrastructure for unified management. It provides capabilities (such as edge autonomy, efficient O&M channels, unitized edge management, edge traffic topology, secure containers, and edge Serverless/FaaS) and support for heterogeneous resources. In short, OpenYurt builds a unified infrastructure for cloud-edge collaboration in a Kubernetes-native manner.

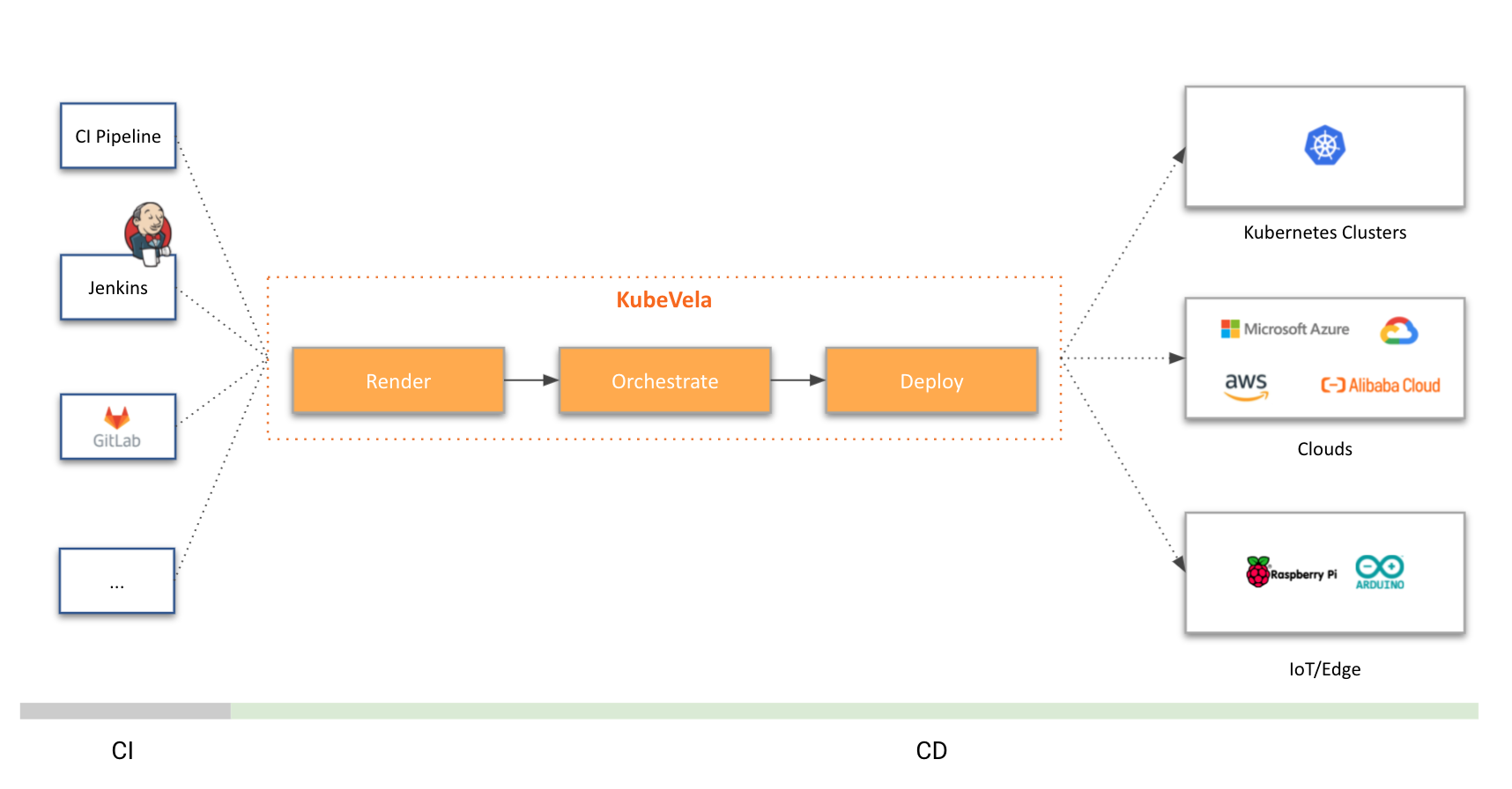

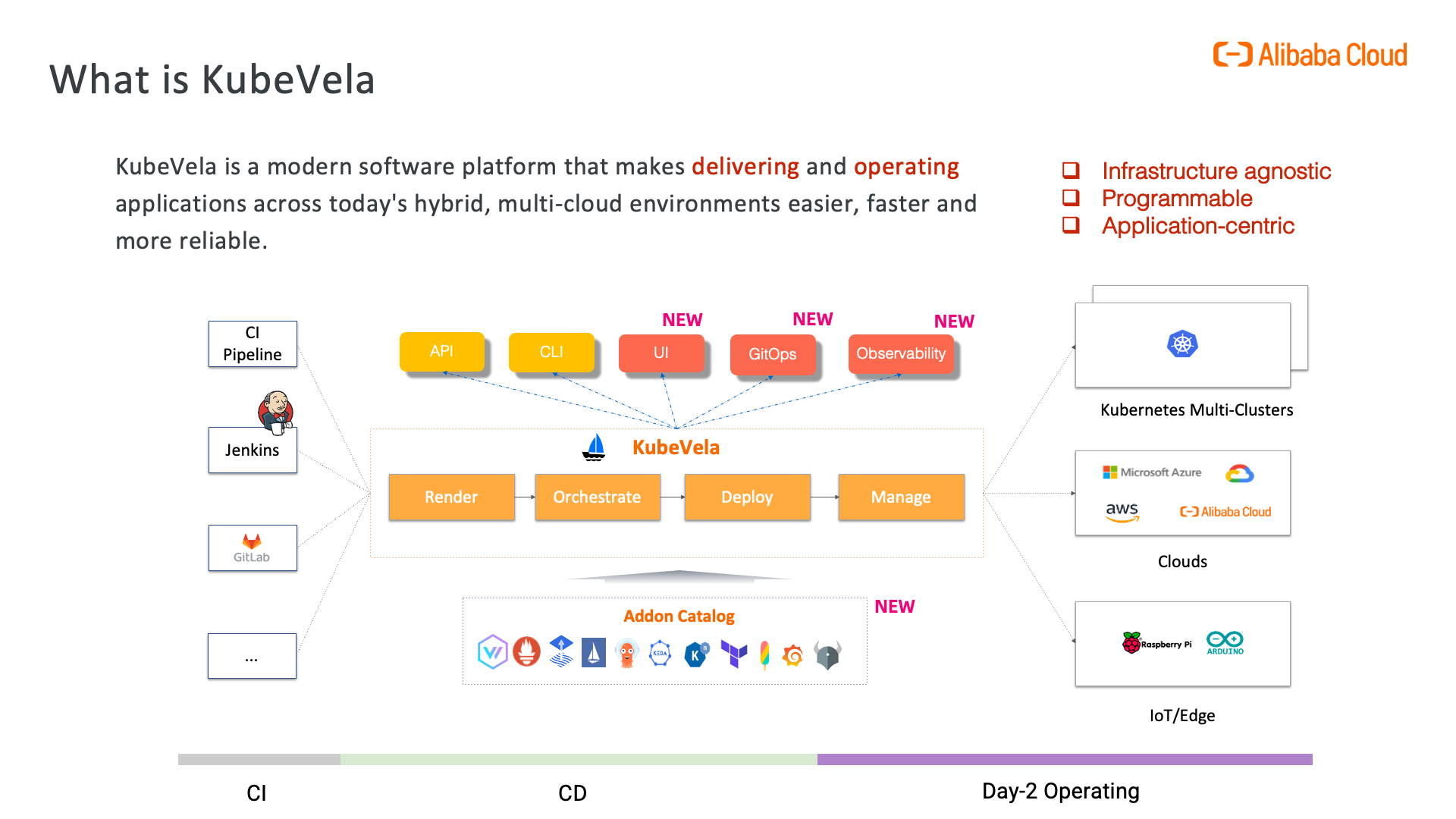

Incubated in the OAM model, KubeVela focuses on helping enterprises build unified application delivery and management capabilities. It shields the complexity of underlying infrastructure for developers and provides flexible scaling capabilities. It also provides out-of-the-box microservice container management, cloud resource management, versioning and canary release, scaling, observability, resource dependency orchestration and data delivery, multi-cluster, CI docking, and GitOps. Maximize the R&D performance of developer self-service application management, which also meets the extensibility demands of the long-term evolution of the platform.

OpenYurt + KubeVela - What Problems Can be Solved?

As mentioned before, OpenYurt supports the access of edge nodes, allowing users to manage edge nodes by operating native Kubernetes. "Edge nodes" are used to represent computing resources closer to users (such as virtual machines or physical servers in a nearby data center). After you add them through OpenYurt, these edge nodes are converted into nodes that can be used in Kubernetes. OpenYurt uses NodePool to describe a group of edge nodes in the same region. After basic resource management is met, we have the following core requirements for how to orchestrate and deploy applications to different NodePools in a cluster.